This Startup Can Track If Your AI Code Is Worth It

Span's 'developer intelligence' software helps Ramp and Vanta track how engineers spend their time, and which AI code editors they use. Now it's raised $25M from Craft Ventures and 100+ tech execs.

The Upshot

In April, J Zac Stein took his cofounder Henry Liu out to dinner to pitch him on a new product: a model for detecting when code was generated by AI.

“I didn’t think it was going to work, but it was technically interesting,” Liu says now. Stein, the CEO of their startup Span, gloats: “He took the bait.”

If Span is successful, it’ll be a nice moment in company lore. Stein and Liu are already happy to tell it in the meantime, as their AI impact tracker, launched in September, starts to help customers like Braze, Intercom, Ramp and Vanta track the value they’re getting from popular AI coding tools.

“It’s becoming more important to push not only for adoption, but for efficiency,” Stein tells Upstarts. “The vision is to get more bang for your buck out of your AI transformation, and the budget you’re spending with AI.”

Founded two-plus years ago by Stein, the former chief product officer of Lattice, and Liu, the former cofounder of a startup acquired by Compass, Span does more than just track AI code. That’s a big (and growing) part of a more basic mission: helping technical leaders keep track of how their engineers are spending their time.

It’s not a new problem, but it’s one the founders argue hasn’t been truly solved in the past decade-plus of enterprise software growth. CTOs and heads of engineering still spot-check pull requests in GitHub, or export records from Jira, or lean on executive summaries from their lieutenants.

From what their teams spend on tools and the tracking built into each one, they can stitch together a sense of productivity, too, but only haphazardly, Span’s founders claim.

“When you’re at that elevation, it’s really hard to get to ground truth, and the larger your team, the harder it is to track,” says Liu. “This gives you the Cliff notes.”

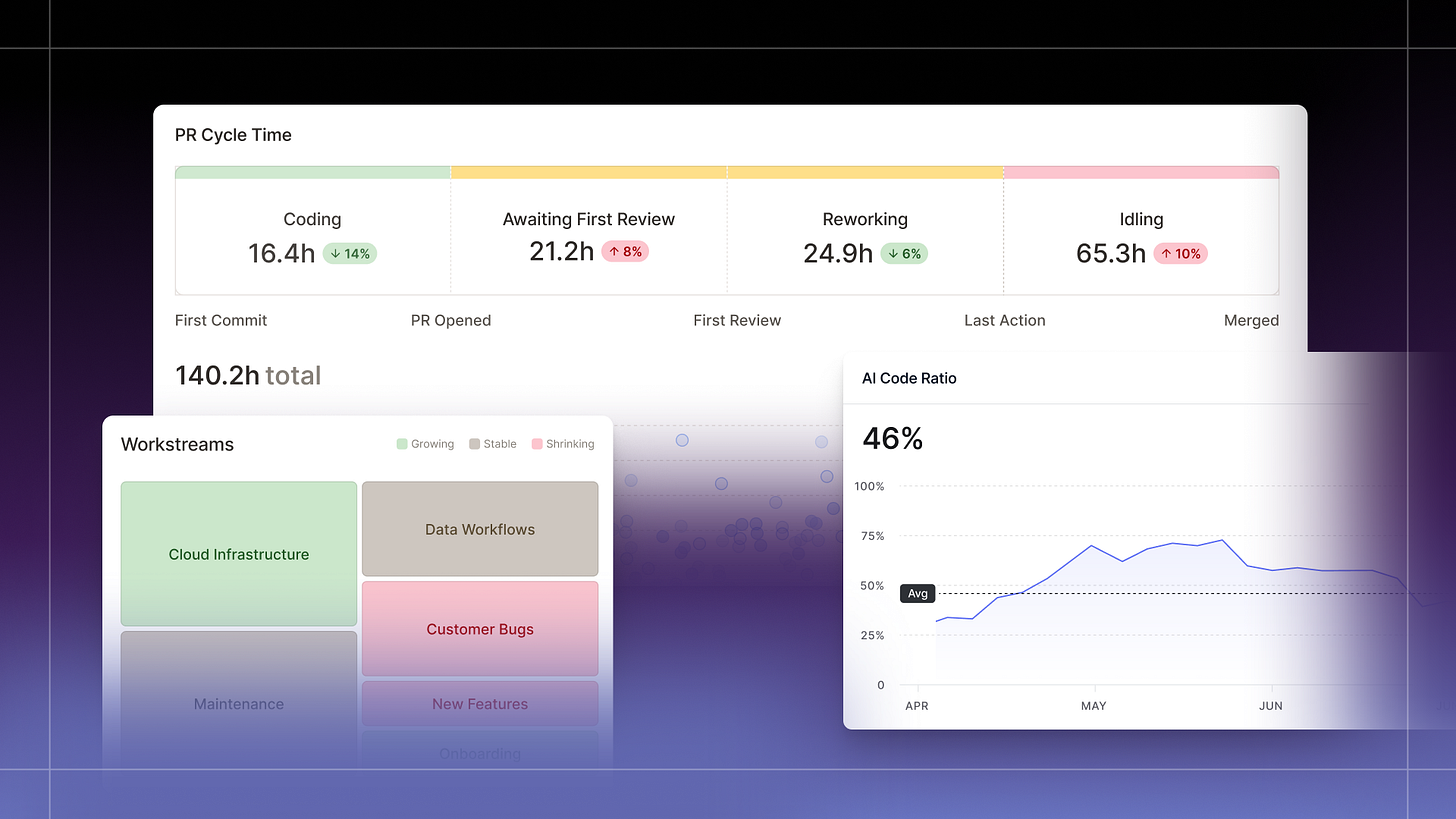

Span’s software purports to pull all that info — including AI usage scanned by its own proprietary model — into one dashboard for easier consumption. Companies can see how their resource allocation compares to peer benchmarks, and which employees are power users (or resistant) of new tools.

With such a technical problem, it’s no surprise that many of Span’s 26 employees are former founders and CTOs themselves. But after two-plus years of building, and with their AI tool in the market, Span is now ready to raise its public profile.

The San Francisco startup has raised $25 million in combined funding across seed and Series A funding rounds, Upstarts exclusively reports.

Backers include Alt Capital, Craft Ventures, SV Angel, BoxGroup, Bling Capital, plus more than 100 tech executives from companies including Notion and Rippling.

Beyond the funding, Span is of interest because of the role it can play in creating more transparency around how AI coding tools are creating (or failing to create) customer value.

At Upstarts, we’ve written about the growth strategies of AI code startups like Windsurf and Cursor, competing alongside Anthropic and OpenAI; we’ve also covered startups looking to make such code more useful, from using mathematical proofs for verifying code to even trying to set up an insurance market to make corporations more comfortable with its risks.

In software development, startups we know well like Linear and Shortcut already help businesses answer the question: what’s everybody doing? Span looks to answer a different one: how are they doing it?

“There’s a smell to AI code,” says Stein. But is it a good one? Read on below.

Handy spanners

Span’s founders got to know each other at Zenefits, where they were both vice presidents through some of the former unicorn’s roller coaster period nearly a decade ago.

They stayed in touch as Stein spent the next five years in executive roles at Lattice, the startup co-founded and previously led by Jack Altman; Liu co-founded a real estate infrastructure startup, Glide, eventually acquired by Compass in 2021.

Around that time, Stein started working with startups more directly himself, as a venture partner at Craft Ventures, the VC firm cofounded by David Sacks, the current Trump administration tech adviser who served as CEO of Zenefits during part of the time that Stein worked there.

An early customer meeting with another Craft portfolio startup, Vanta, eventually led Stein to join Vanta’s board as an independent director that year, too. (He’s also a board director at AI startup Writer, another customer.)

When ChatGPT took off in late 2022, Stein and Liu recognized, like many other startups, a potential sea change moment for launching new companies.

“I had spent the better part of the previous five years trying to find an excuse to enlist Henry in a startup, because he’s just that good,” Stein says. “For us, it was the chance to solve a problem we’d seen firsthand.”

That problem: scaling companies pick up more busy work, lose engineering focus and slow down as they grow. “With AI coming online, it felt like we were allowed to approach the problem with fresh eyes,” he adds.

In meetings with dozens of CTOs, the duo felt validated that they weren’t alone in finding that existing tools from Atlassian and other software companies didn’t solve the issue. The work these execs oversaw might be high tech, but they were still relying on executive summaries and status reports, or mucking around in GitHub when time permitted.

Span’s dashboards allow for more granularity with a centralized knowledge base, the startup claims. Its benchmarks for many of its tracked data points, meanwhile, help customers see if they’re drifting off course. “When people might look at what happened a quarter later, it would otherwise be too late to do anything about it,” Stein says.

Early user Intercom found value in automating its capitalization report for R&D costs, Span’s founders say; another customer, SecurityScorecard, figured out it was spending much more than anticipated – more than half its resources – on maintaining old features instead of working to ship new ones.

In 11 years at car marketplace Carvana, chief product officer Dan Gill has overseen product and engineering for a company that’s grown from about 50 employees to 20,000, with hundreds of engineers. To try to keep tabs on his “decentralized” teams, Gill would export data from a bunch of sources like Atlassian’s Jira product into spreadsheets, then try to produce his own dashboards in business intelligence tool Tableau.

“The question of, ‘am I getting good ROI on my engineering,’ it’s been a hard question for business leaders to answer,” Gill says. Too many data sources and systems, or bespoke team terminology, made it all a mess.

“Span has been able to normalize and synthesize that info to be really actionable,” Gill adds. “What got us excited was the ability to leverage the exhaust of what our engineers were doing, anyway.”

Model behavior

Whether you use OpenAI, Anthropic or a startup like Cursor, code generated by AI has similar tells, Stein says: variables and comments in a certain style, a verbosity of sorts that all add up to that aforementioned “smell.”

Span’s bespoke model, span-detect-1, is particularly good at spotting those signals, the company claims. With it, Span is able to measure how much AI code a company’s technical staff are submitting alongside their own, and how much it needs to be edited or fixed. Span can even look back at code already in production to back-test how resilient that AI code is.

(This reminds us of what Cursor COO Jordan Topoleski told Upstarts recently about how the company tracks ‘half life’ of generated code.)

Craft Ventures’ Brian Murray, another Zenefits alum who invested in Span’s Series A, sees the startup’s software helping companies to better bifurcate their resources. For some projects or uses, AI will suffice; for others, the added cost of more skilled engineering “craftspeople” (no pun seemingly intended) will still make sense.

“Imagine CTOs with a dial to change how much they’re spending on salary versus tokens,” Murray says.

Across the six code editors it currently tracks for customers, Span has already seen the percentage of code they’ve generated with AI grow this year from 13% to 38%. But at a cost: “We found that even though overall throughput is going up, each individual PR unit of code takes longer to ship,” Liu adds.

Span’s founders say that data suggests that customers should invest more in clear code review guidelines and review tools. That perch is part of what makes Span interesting to Altman, who wrote the startup its first check: “It’s tooling to understand the market that is probably most impacted by AI so far,” he tells Upstarts via text.

But it also points to a potential tension point for Span in the future: if customers’ engineering leaders fall out of love with the code editors, they might be tempted to shoot the messenger, too.

Of course, if adoption continues to increase, Span can benefit from both sides, giving customers peace of mind and helping the AI companies sell faster.

That would invite more competition. Stein says he’s not worried, at least for now: “Everyone else is hand-waving.”
